Java Programming Techniques and APIs

Volatile Keyword in Java

Introduction

Has your gut ever warned you that an implementation might be flawed while you were working on an asynchronous task or a multithreaded operation, even though you could not say exactly what was wrong? If so, your instincts were good: many subtle gaps can open up when multiple threads work on the same data. Multithreading is powerful and clever, but it also comes with a number of responsibilities. Let's look at a few of them.

Great power entails enormous responsibility!

Yes, the adage mentioned above that applies to science fiction settings also applies to the real world.

With multithreading, several threads can run simultaneously on multiple CPUs, which allows for better responsiveness and faster computation. Multithreaded programs benefit most from multiprocessor machines, but they can also run on a single-processor machine. Either way, regardless of the number of CPUs, there are some pitfalls that a developer working with multithreaded software must account for.

The "Visibility Problem" is one of the pitfalls that multithreading introduces. Let's see what it is:
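A minimal sketch of the kind of code in question, assuming a shared boolean named "flag", a thread2 that loops while the flag is true, and a thread1 that clears it. The class and method names are made up for illustration, and the daemon thread and bounded wait are there only so that the demo always terminates:

```java
// Illustrative sketch only: VisibilityDemo and workerStopped are hypothetical names.
class VisibilityDemo {
    // Deliberately NOT volatile, so a write by one thread is not
    // guaranteed to become visible to a thread that is already looping.
    static boolean flag = true;

    // Runs the scenario once and reports whether thread2 left its loop
    // within waitMillis after thread1 cleared the flag.
    // waitMillis must be positive (join(0) would wait forever).
    static boolean workerStopped(long waitMillis) {
        flag = true;
        Thread thread2 = new Thread(() -> {
            while (flag) {
                // keep working for as long as the flag looks true
            }
        });
        thread2.setDaemon(true); // the JVM can still exit if thread2 never stops
        Thread thread1 = new Thread(() -> flag = false);
        try {
            thread2.start();
            Thread.sleep(100);        // let thread2 get into its loop first
            thread1.start();
            thread1.join();
            thread2.join(waitMillis); // bounded wait, never forever
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return !thread2.isAlive();
    }

    public static void main(String[] args) {
        // Either answer is legal here; the outcome depends on the JVM and hardware.
        System.out.println("thread2 stopped: " + workerStopped(1000));
    }
}
```

Whether thread2 actually stops depends on the JVM, the JIT compiler and the hardware, so this program may print either true or false.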

- Let's assume a shared boolean variable "flag" that is initially set to "true", and two threads: thread1, which sets the flag to false, and thread2, which runs a while loop for as long as the flag is true.

- Let's also assume that the two threads are running on separate cores, since a "Visibility Problem" is most likely in a multiprocessor environment.

- Say thread2 starts first. The flag is true, so this thread enters the while loop and keeps working until it sees the flag change from true to false.

- After a while, thread1 runs and changes the flag to false.

- You might assume that, since the flag is now false, the while loop will end. However, that is not guaranteed: the while loop in thread2 may continue to run.

But how?

To answer that, let's look at what the memory and the JVM (Java Virtual Machine) are actually doing.

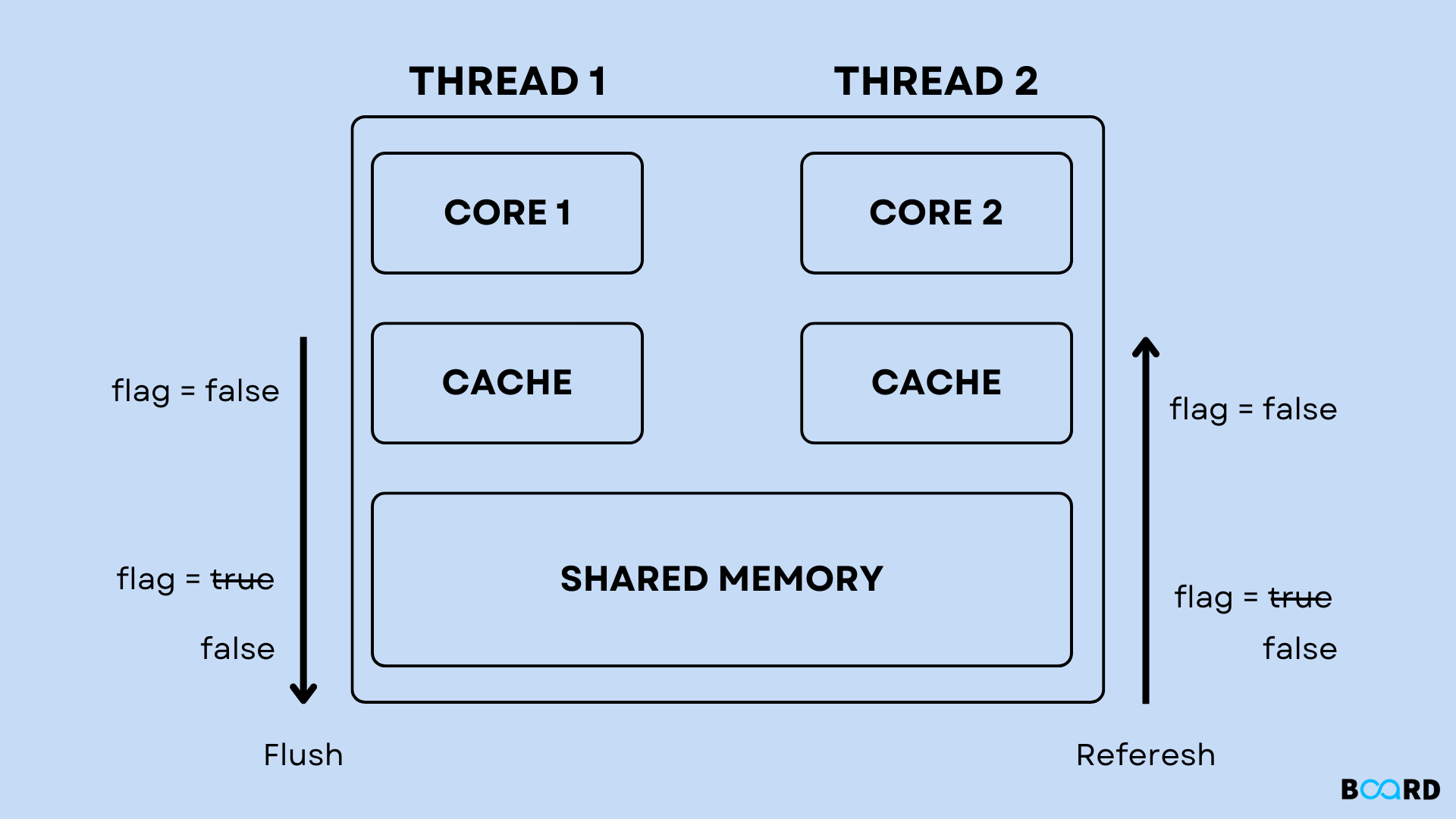

Since we are considering a multiprocessor environment, the diagram has two cores (Core-1 and Core-2).

Each core has its own cache, also referred to as its "local cache", and each core can access only its own local cache. In the diagram, the local caches of Core-1 and Core-2 are shown below those cores. Keep in mind that this is a simplified mental model; what Java actually specifies is when a write by one thread is guaranteed to be visible to another.

- The flag was initially set to true, so its value in shared memory is true. As said above, thread2 starts its loop first, so it also holds the flag as true in its local cache.

- After some time has passed, thread1 (running on a different core) changes the flag to false, but only in its local copy. This modification affects only its own cache; it has not yet reached shared memory.

- Because shared memory has not been updated with the new value from thread1's cache, the value of "flag" in thread2's cache (Core-2's cache) was NOT changed to false, and thread2 continues to run its "while" loop.

- This is exactly the Visibility Problem: the changes made by thread1 are not visible to thread2. So what is the answer? A: the "volatile" keyword! The issue can be resolved by simply declaring the flag as "volatile", that is, by adding the "volatile" modifier to the declaration of the flag variable.
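The change is a single keyword on the declaration. A minimal sketch, again assuming hypothetical class and method names and the same two-thread setup as in the scenario above:

```java
// Illustrative sketch only: VolatileFlagDemo and workerStopped are hypothetical names.
class VolatileFlagDemo {
    // volatile: a write by one thread is visible to later reads by other threads.
    static volatile boolean flag = true;

    // Reports whether thread2 left its loop within waitMillis (must be positive).
    static boolean workerStopped(long waitMillis) {
        flag = true;
        Thread thread2 = new Thread(() -> {
            while (flag) {
                // every check re-reads the volatile flag
            }
        });
        thread2.setDaemon(true);
        Thread thread1 = new Thread(() -> flag = false);
        try {
            thread2.start();
            Thread.sleep(100);        // let thread2 get into its loop first
            thread1.start();
            thread1.join();
            thread2.join(waitMillis); // thread2 should finish well before this expires
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return !thread2.isAlive();
    }

    public static void main(String[] args) {
        System.out.println("thread2 stopped: " + workerStopped(5000));
    }
}
```

With the flag declared volatile, thread2 is expected to notice the change and leave its loop shortly after thread1 writes false.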

Now thread2 will be able to see the modifications made by thread1. Declaring the flag volatile ensures that a write is flushed to shared memory and that subsequent reads pick up the value from shared memory instead of a stale cached copy. In Java Memory Model terms, a write to a volatile variable happens-before every subsequent read of that variable. The figure below shows how, with the volatile keyword added, changes are flushed from one cache to shared memory and then refreshed in the other cache.

Why is it called volatile?

In practice, what this keyword signifies is that it solves the Visibility Problem!

"Volatile" is defined as tending to change quickly and unexpectedly, especially for the worse. That fits the keyword well: it marks a variable whose value may be changed at any moment by another thread, so every read must fetch the latest value from shared memory and every write must be published to it right away.

Q: Will it actually work in every circumstance?

Do you know what "Synchronization" is?

Responsibility is not so simple, is it? Although multithreading is a fantastic feature, one must be aware of its pitfalls, and the volatile keyword addresses only some of them.

What about issues of synchronization, though? In a multithreaded application, threads can interleave in many combinations and permutations, and some of those interleavings produce wrong results unless the threads are synchronized. Such an interleaving may be rare, but it is certainly possible.

Here's an example:
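A minimal sketch of such a case, assuming a shared volatile counter named "count" and two threads that each increment it many times. The class and method names are illustrative only:

```java
// Illustrative sketch only: VolatileCounterDemo and runTwoThreads are hypothetical names.
class VolatileCounterDemo {
    // volatile gives visibility, but it does not make count++ atomic.
    static volatile int count = 0;

    // Lets two threads increment the shared counter and returns the final value.
    static int runTwoThreads(int incrementsPerThread) {
        count = 0;
        Runnable task = () -> {
            for (int i = 0; i < incrementsPerThread; i++) {
                count++; // read, add one, write back: three separate steps
            }
        };
        Thread thread1 = new Thread(task);
        Thread thread2 = new Thread(task);
        try {
            thread1.start();
            thread2.start();
            thread1.join();
            thread2.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return count;
    }

    public static void main(String[] args) {
        int perThread = 100_000;
        System.out.println("expected " + (2 * perThread) + ", got " + runTwoThreads(perThread));
    }
}
```

On a multi-core machine the reported value is often lower than expected, because some increments overwrite each other. The exact number varies from run to run.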

- Consider a scenario in which we do not know how the threads will be scheduled while they run on several cores. It is conceivable that thread1 has "just" read the value of count and thread2 has "just" read the value of count as well.

- At this point, both threads have read the value of count as 0.

- Then thread1 updates count to count+1, which makes count equal to 1.

- However, thread2 had already read count as 0, so it now increments based on the value it read earlier and also writes 1.

- Do you see what took place? For the sake of clarity, let's quickly summarize: both threads executed "count++" on a count that started at 0.

- The developer would have wanted the second increment to take count to 2. It didn't: count ends up as 1, and one update is lost.

- The flaw in this situation is the lack of synchronization: nothing coordinated the two threads' access to count.

- Volatile is not the answer here. It does make thread2 read the latest value from shared memory, but thread2 had already read count before thread1 wrote its update, so the value it increments is stale anyway.

Volatile cannot make this action thread-safe because the "++" operator is a compound operation rather than an atomic one.

Note:

- "Compound" denotes exactly what the "++" operator does. A compound is defined as "a mixture", and "++" is a mixture of three steps: reading the current value, adding one to it, and writing the result back.

- Atomic operations, by contrast, are single, indivisible operations, such as a single read or a single write of a variable.

- A compound operation is a "mixture" of several atomic actions.
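The three steps hidden inside "++" can be written out explicitly. This is a sketch with hypothetical names, run on a single thread so that the result is predictable:

```java
// Illustrative sketch only: IncrementSteps and incrementInSteps are hypothetical names.
class IncrementSteps {
    static int count = 0;

    // Does what the statement "count++;" does, one step at a time.
    static void incrementInSteps() {
        int temp = count;  // 1. read the current value
        temp = temp + 1;   // 2. add one to the value that was read
        count = temp;      // 3. write the result back
    }

    public static void main(String[] args) {
        incrementInSteps();
        incrementInSteps();
        System.out.println(count); // prints 2: with one thread, no update is lost
    }
}
```

With several threads, another thread can run between step 1 and step 3, which is how an update gets lost.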

We can use the "synchronized" keyword to fix this issue:

- Code written in a synchronized method or block can be executed by only one thread at a time for a given lock object.

- A synchronized block takes an object as a parameter and uses it as a lock, while a synchronized instance method locks on the instance it is called on. A thread must acquire the lock before entering, and every other thread that wants the same lock has to wait until it is released.
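A minimal sketch of the counter fixed with "synchronized", showing both the method form and the block form. The class and method names are hypothetical:

```java
// Illustrative sketch only: SynchronizedCounterDemo and its methods are hypothetical names.
class SynchronizedCounterDemo {
    private int count = 0;

    // A synchronized instance method locks on "this" for the whole call.
    synchronized void increment() {
        count++;
    }

    // The same protection written as a synchronized block with an explicit lock object.
    void incrementWithBlock() {
        synchronized (this) {
            count++;
        }
    }

    synchronized int get() {
        return count;
    }

    // Lets two threads increment one counter and returns the final value.
    static int runTwoThreads(int incrementsPerThread) {
        SynchronizedCounterDemo counter = new SynchronizedCounterDemo();
        Thread thread1 = new Thread(() -> {
            for (int i = 0; i < incrementsPerThread; i++) counter.increment();
        });
        Thread thread2 = new Thread(() -> {
            for (int i = 0; i < incrementsPerThread; i++) counter.incrementWithBlock();
        });
        try {
            thread1.start();
            thread2.start();
            thread1.join();
            thread2.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return counter.get();
    }

    public static void main(String[] args) {
        System.out.println(runTwoThreads(100_000)); // prints 200000
    }
}
```

Both forms lock on the same object here, so the two threads can never be inside an increment at the same time and no update is lost.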

Recall when to use synchronized and volatile

- When a variable is read by many threads (an atomic operation) but only one thread changes or writes to it, choose "volatile": it publishes the writer's modifications to shared memory so that the other threads read the up-to-date value. Keep in mind that "volatile" is thread-safe in this case but not in the next one.

- When one or more variables are subject to read AND write operations (compound operations) from several threads, choose "synchronized": each thread completes its whole operation before another thread may start, which keeps the threads in sync.

- In short, use volatile for atomic operations. Compound operations such as increment (the "++" operator) are not atomic, so in those situations use synchronized to get results that are accurate and thread-safe.
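The two rules can be seen side by side in one small program. This is only a sketch under assumed names: a "running" flag with a single writer is volatile, while a total that every worker increments is guarded by synchronized. Each worker also keeps a private tally so that the shared total can be checked:

```java
// Illustrative sketch only: WhenToUseDemo and all of its members are hypothetical names.
class WhenToUseDemo {
    // Written by one thread (the caller), read by all workers: volatile is enough.
    private static volatile boolean running = true;

    // Incremented by every worker (read, add, write): needs synchronized.
    private static int total = 0;

    private static synchronized void reset() { total = 0; }

    private static synchronized void addOne() { total++; }

    private static synchronized int total() { return total; }

    // Returns { shared total, sum of the workers' private tallies }.
    static int[] run(int workerCount, long runMillis) {
        running = true;
        reset();
        int[] tally = new int[workerCount]; // one private slot per worker
        Thread[] workers = new Thread[workerCount];
        for (int w = 0; w < workerCount; w++) {
            final int id = w;
            workers[w] = new Thread(() -> {
                while (running) {
                    addOne();
                    tally[id]++; // touched by this worker only
                }
            });
            workers[w].start();
        }
        try {
            Thread.sleep(runMillis);
            running = false; // single writer: the workers see this and stop
            for (Thread worker : workers) worker.join();
        } catch (InterruptedException e) {
            running = false;
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        int sum = 0;
        for (int n : tally) sum += n;
        return new int[] { total(), sum };
    }

    public static void main(String[] args) {
        int[] result = run(4, 50);
        System.out.println("shared total = " + result[0] + ", sum of tallies = " + result[1]);
    }
}
```

The two numbers should match on every run, although their value varies. Removing "synchronized" from addOne would be expected to let the shared total fall behind the sum of the tallies.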

Conclusion

- Multithreading is a powerful technique that can save you time, but you are still responsible for its correct execution.

- The "volatile" keyword, which ensures that threads on all cores see the latest value of a variable rather than a stale cached copy, can quickly fix visibility issues.

- Volatile never blocks: a thread accessing a volatile variable is never put in a waiting state. With "synchronized", threads can be held waiting until the lock is released.

- Be aware that volatile variables are typically slower to read and write than regular variables, because their accesses cannot simply be served from a cached copy. Access to cache memory is quicker than access to RAM.

- You must decide whether volatile or synchronized will be used because each has unique use cases and restrictions.

Hope this explanation helps you understand multithreaded code better the next time you write it.